What happens when we don’t include trans and non-binary people in our products? How do our products cause harm? Why is education so important and change so hard?

The Markup’s discovery that Google allowed advertisers to exclude non-binary people from job and housing ads, while blocking them from excluding men or women from those ads, is a clear example of what happens when we don’t include or prioritize minoritized groups in our products.

How Google allowed this discrimination to happen.

TL;DR: Careless design leads to broken features that open the door to discrimination against trans and non-binary people.

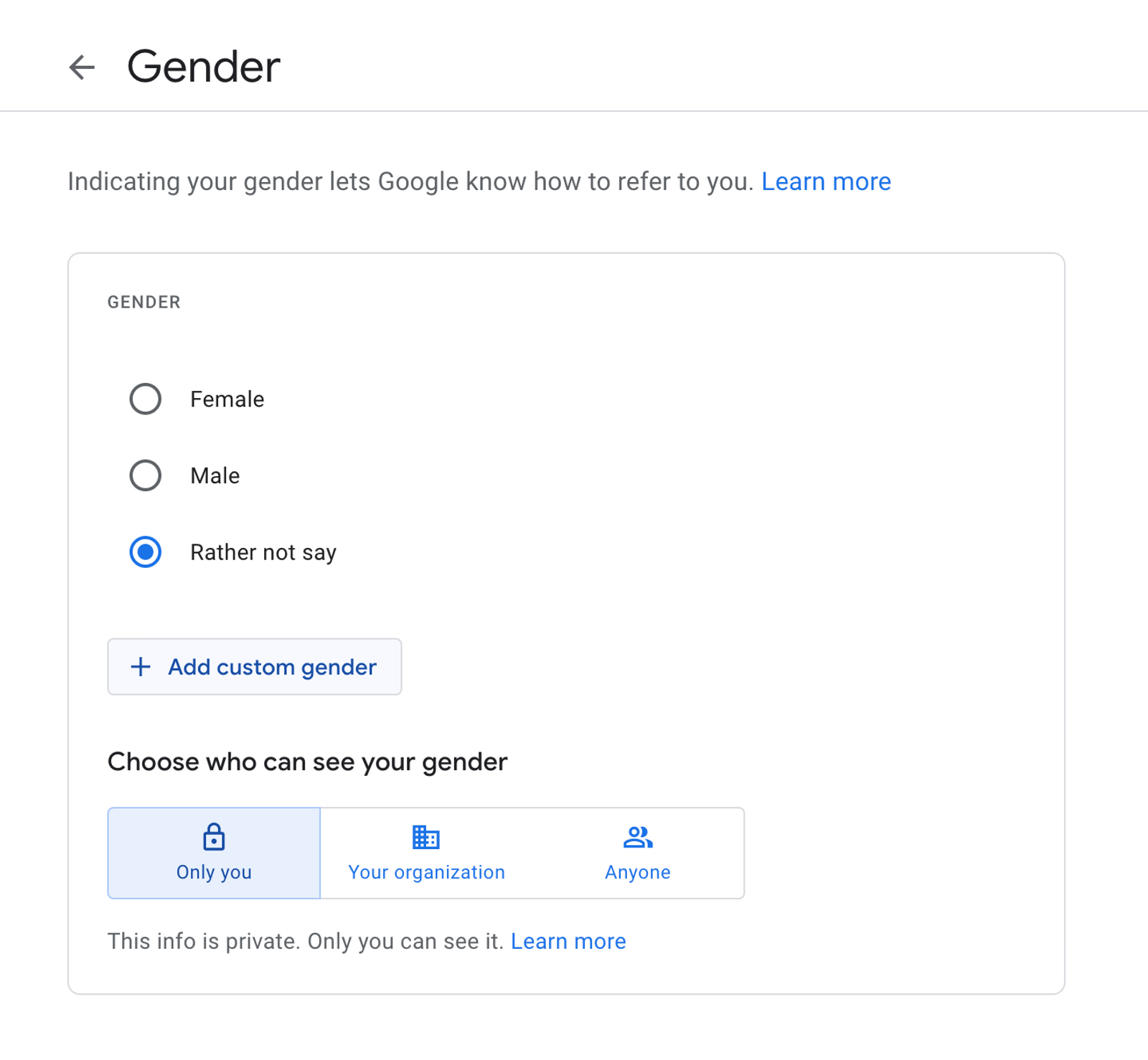

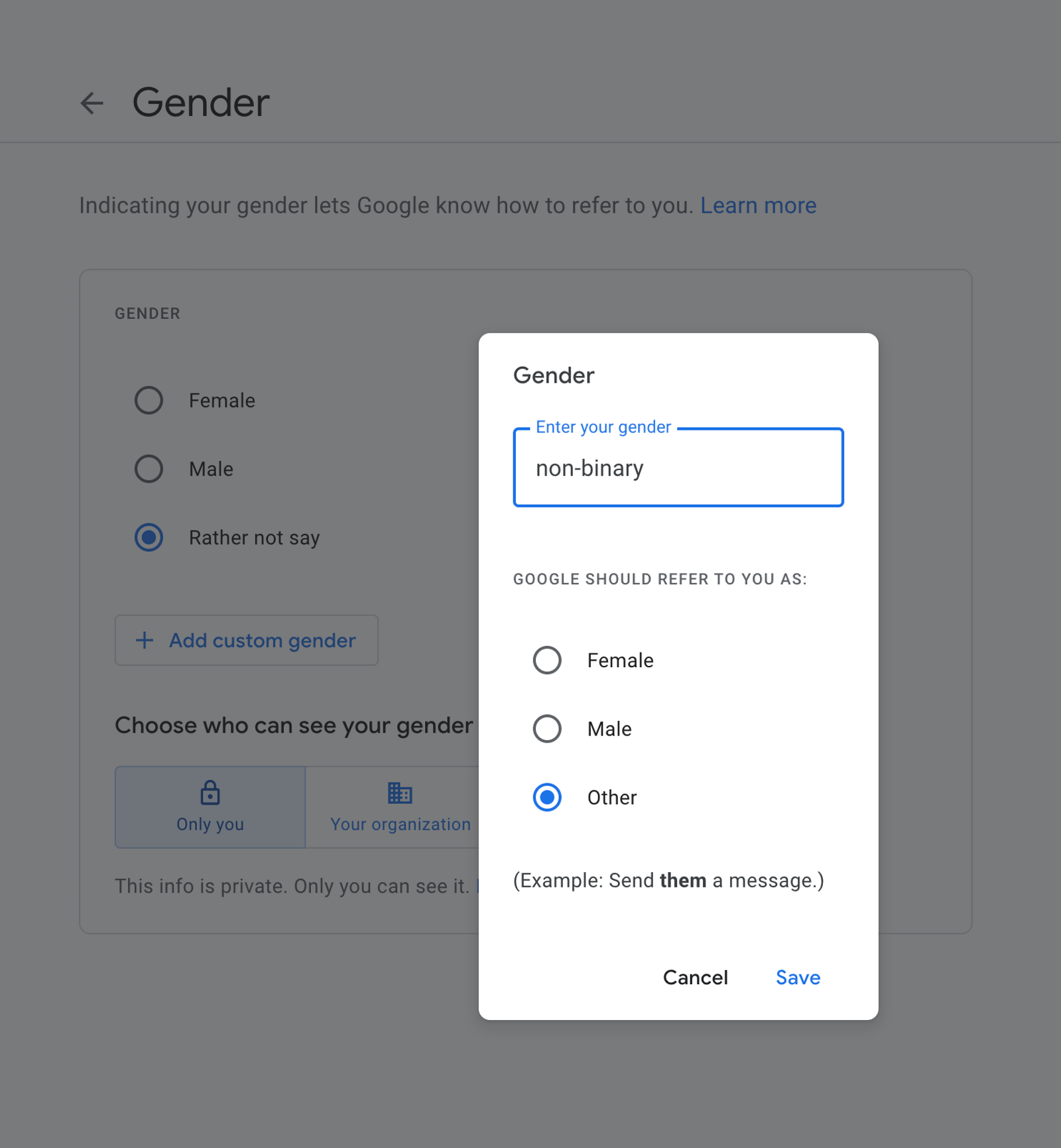

When signing up for a Google account, users are given four gender options:

- Male

- Female

- Rather Not Say

- Custom

When picking the “custom” option, users can write their gender in a text box.

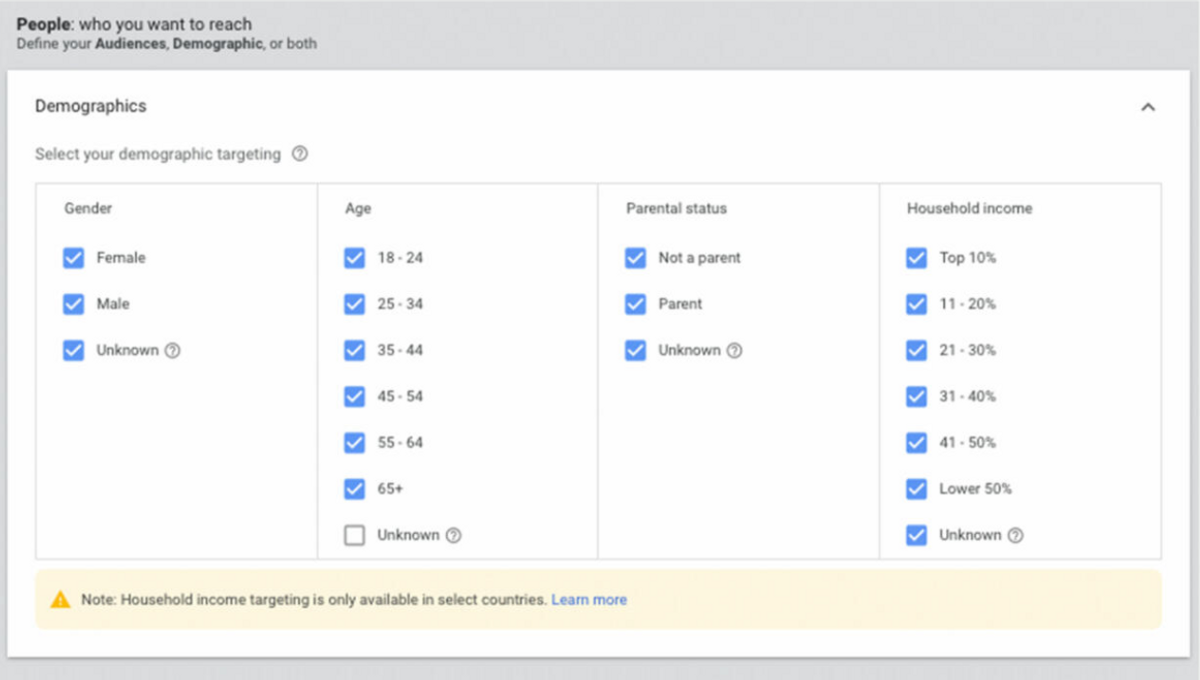

But advertisers only get three checkboxes they can use when picking an audience for their ads:

- Male

- Female

- Unknown

The Markup found that everyone who picked the “rather not say” or “custom” option in their settings gets grouped into the “unknown” category for advertisers.

Image from The Markup.

While Google doesn’t allow ads to exclude men or women from jobs, housing, or financial products, it did allow advertisers to exclude the “unknown” category, which left those outside of the gender binary excluded as well. All of this happened at a time when housing, jobs and financial aid are crucial for everyone, and even more so for those who are already more often exposed to discrimination and abuse.
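To make the mechanism concrete, here is a minimal Python sketch of the logic The Markup described. It is purely hypothetical: the names (`AdGender`, `to_ad_category`, `exclusion_allowed`, `audience`, `SENSITIVE_AD_TYPES`) are made up for illustration, and this is not Google’s actual code or API. It only assumes what the reporting describes: four sign-up options collapse into three advertiser categories, and the policy check blocks excluding men or women from sensitive ads but never checks the “unknown” group.

```python
from enum import Enum
from typing import List, Optional, Set, Tuple


class AdGender(Enum):
    """The three checkboxes advertisers see (per The Markup's reporting)."""
    MALE = "male"
    FEMALE = "female"
    UNKNOWN = "unknown"


# Hypothetical: ad types where excluding men or women is not allowed.
SENSITIVE_AD_TYPES = {"housing", "jobs", "financial"}


def to_ad_category(option: str, custom_text: Optional[str] = None) -> AdGender:
    """Map a sign-up gender option to an advertiser category.

    custom_text is accepted but never read: whatever a user typed in the
    "Custom" box is discarded, just like the "Rather Not Say" choice.
    """
    if option == "Male":
        return AdGender.MALE
    if option == "Female":
        return AdGender.FEMALE
    return AdGender.UNKNOWN  # "Rather Not Say" and every "Custom" answer


def exclusion_allowed(ad_type: str, excluded: Set[AdGender]) -> bool:
    """Hypothetical policy check mirroring the reported behavior."""
    if ad_type in SENSITIVE_AD_TYPES:
        # Only the two binary categories are protected; UNKNOWN is never checked.
        return not (excluded & {AdGender.MALE, AdGender.FEMALE})
    return True


def audience(users: List[Tuple[str, str, Optional[str]]],
             excluded: Set[AdGender]) -> List[str]:
    """Return the names of users who would still see the ad."""
    return [name for name, option, text in users
            if to_ad_category(option, text) not in excluded]


# Hypothetical example users: (name, sign-up option, custom text).
users = [
    ("Alex", "Male", None),
    ("Bea", "Female", None),
    ("Kim", "Custom", "non-binary"),
    ("Sam", "Rather Not Say", None),
]

print(exclusion_allowed("housing", {AdGender.MALE}))  # False: blocked

excluded = {AdGender.UNKNOWN}
if exclusion_allowed("housing", excluded):  # passes the check
    print(audience(users, excluded))  # ['Alex', 'Bea']
```

Each piece looks harmless on its own, but together they let an advertiser drop everyone outside the binary from a housing ad without ever touching the protected checkboxes.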

Alienated and erased by design.

The way this data is labeled already shows how much of an afterthought gender-diverse people were.

As non-binary people, we first have to indicate that we’re “other” or “custom”, and then that information gets disregarded and Google categorizes us as “unknown”. First we’re alienated, then we’re forgotten about.

We should include and prioritize the needs and safety of minoritized groups, as early in the process as possible.

The data that comes out of these gender selection boxes isn’t just sitting in a database somewhere; it’s actively being used. In this case, it decides who gets to see certain ads, and Google’s lack of care for trans and non-binary people led to vulnerable groups being excluded from housing and job ads.

And this isn’t unique to ads: data on gender is frequently collected and used, from targeted ads and demographic research to dating apps and medicine.

If we don’t include and prioritize people from minoritized groups in our design and tech practices, we risk not only collecting incomplete or incorrect data, but also processing and using it in biased and harmful ways.

Preventing this goes beyond just hiring more trans people or doing user tests with a diverse audience, though.

Trans and non-binary people would probably have flagged the potential harm behind those features, but they also need to be given trust, safety and support.

Just recently, Google fired Timnit Gebru and Meg Mitchell from its ethical AI team because of a research paper critical of AI systems that process language. Meanwhile, Amazon is paying its employees to quit as a way to block unionization efforts.

Lack of data, visibility and accountability.

As The Markup’s story also pointed out, it’s difficult to know which ads you’re missing out on because of your gender. While we can often get information about why we’re seeing a certain ad, we can’t ask “why am I not seeing this ad?” for ads we never see, which makes it hard to hold companies accountable.

Similarly, if those companies analyze our data to understand what issues we’re facing on their platform but don’t have accurate data on non-binary people, they can easily overlook our needs.

I’ve participated in countless employee satisfaction surveys where non-binary wasn’t an option at all, meaning the answers of non-binary folks were miscategorized. When companies then use that data to decide what they need to improve, issues specific to trans and non-binary people are lost because there simply is no data on them.

This is why data isn’t neutral or objective: it’s influenced by those who collect it, and can later be further compromised by the biases of those who use it.

Preventable accidents.

It’s easy to label these inequalities as _“accidental”_ or “unintended side effects”. But how accidental or innocent is this really? This isn’t the first example of Google (and other tech companies) causing harm to minoritized people, and it won’t be the last one either.

Even Google’s gender selection form at sign-up has been criticized for a long time (I wrote about it as well), and neither ethical design nor trans people are new concepts.

Most trans and non-binary people are anything but surprised that something like this could happen, given that we constantly experience the consequences of trans-exclusionary design.

As I touched upon earlier, to me this shows that either no trans or non-binary folks were involved or consulted on this (which also means a lack of user research, in other words bad design), or their concerns just weren’t listened to.

We must, collectively, be better at including and protecting minoritized groups in our designs. After all, features and data don’t exist in a vacuum. Technology is so embedded into our society that even seemingly small features can cause real-world harm.

Want to learn more? There's a follow-up article on the way; follow my Patreon to get access to it a week early 👀

Hi! 👋🏻 I'm Sarah, an independent developer, designer and accessibility advocate, located in Oslo, Norway. You might have come across my photorealistic CSS drawings, my work around dataviz accessibility, or EthicalDesign.guide, a directory of learning resources and tools for creating more inclusive products. You can follow me on social media or through my newsletter or RSS feed.

💌 Have a freelance project for me or want to book me for a talk? Contact me through collab@fossheim.io.
